How to send Docker logs to Google Cloud Logging

Over the past few weeks we migrated most of our infrastructure to Google Cloud, using many of its services (Kubernetes Engine, Stackdriver Monitoring, Container Registry, Cloud SQL, …).

Previously we used logspout to aggregate all the logs generated in our Docker containers in Papertrail. So we were quite happy to see that a good log aggregation service is included out of the box for applications running on Google Kubernetes Engine (GKE).

With most of our logs already in Google Cloud Logging, we wondered whether we could ditch Papertrail completely and redirect the logs from all our Docker containers to Google Cloud Logging, even those running on external servers.

It turns out that the Google Cloud Logging driver (gcplogs) is an official Docker logging driver, already included in the Docker daemon. So here is what we had to do:

1. Create a service account in Google Cloud

This account will be used by the Docker daemon to authenticate with Google Cloud. This page describes how to create a service account. Do not give it the Owner role as described in the documentation; all this account needs is the Logs Writer role.
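For readers who prefer the command line over the console, the same step can be sketched with the gcloud CLI. This is only an illustration, not the procedure we followed: the function names and the display name are made up for this example, and the commands are wrapped in functions so that nothing runs until you call them with your own project ID, account name and key path.

```shell
#!/bin/sh
# Sketch: create a service account that may only write logs.
# Function names and the display name are hypothetical; pass your own values.

# Build the e-mail address Google Cloud assigns to a service account.
sa_email() {
    # $1 = service account name, $2 = project ID
    printf '%s@%s.iam.gserviceaccount.com\n' "$1" "$2"
}

# Create the account, grant it Logs Writer, and download a JSON key.
create_logs_writer_key() {
    # $1 = project ID, $2 = service account name, $3 = key file path
    email=$(sa_email "$2" "$1")

    gcloud iam service-accounts create "$2" \
        --project="$1" \
        --display-name="Docker log writer" &&
    gcloud projects add-iam-policy-binding "$1" \
        --member="serviceAccount:$email" \
        --role="roles/logging.logWriter" &&
    gcloud iam service-accounts keys create "$3" \
        --iam-account="$email"
}
```

A call could look like `create_logs_writer_key google-cloud-project docker-logs /path/to/key.json`, where `docker-logs` is a placeholder account name. The resulting key file is what the Docker daemon needs in the next step.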

2. Configure the docker daemon

This was the trickiest part. The documentation we found always stated that the gcplogs driver uses the environment variable GOOGLE_APPLICATION_CREDENTIALS to locate the JSON key file generated in step 1.

Since the Docker daemon is controlled by systemd on our Ubuntu installations, we were able to configure the authentication environment variable as described here: in the section [Service] of the file /lib/systemd/system/docker.service we added the line

Environment="GOOGLE_APPLICATION_CREDENTIALS=/path/to/key.json"

To bring this change into effect it is required to run the following commands:

sudo systemctl daemon-reload
sudo systemctl restart docker

Now we are able to run docker containers and redirect their logs to Google Cloud by using the command line arguments like:

docker run -d --log-driver=gcplogs \

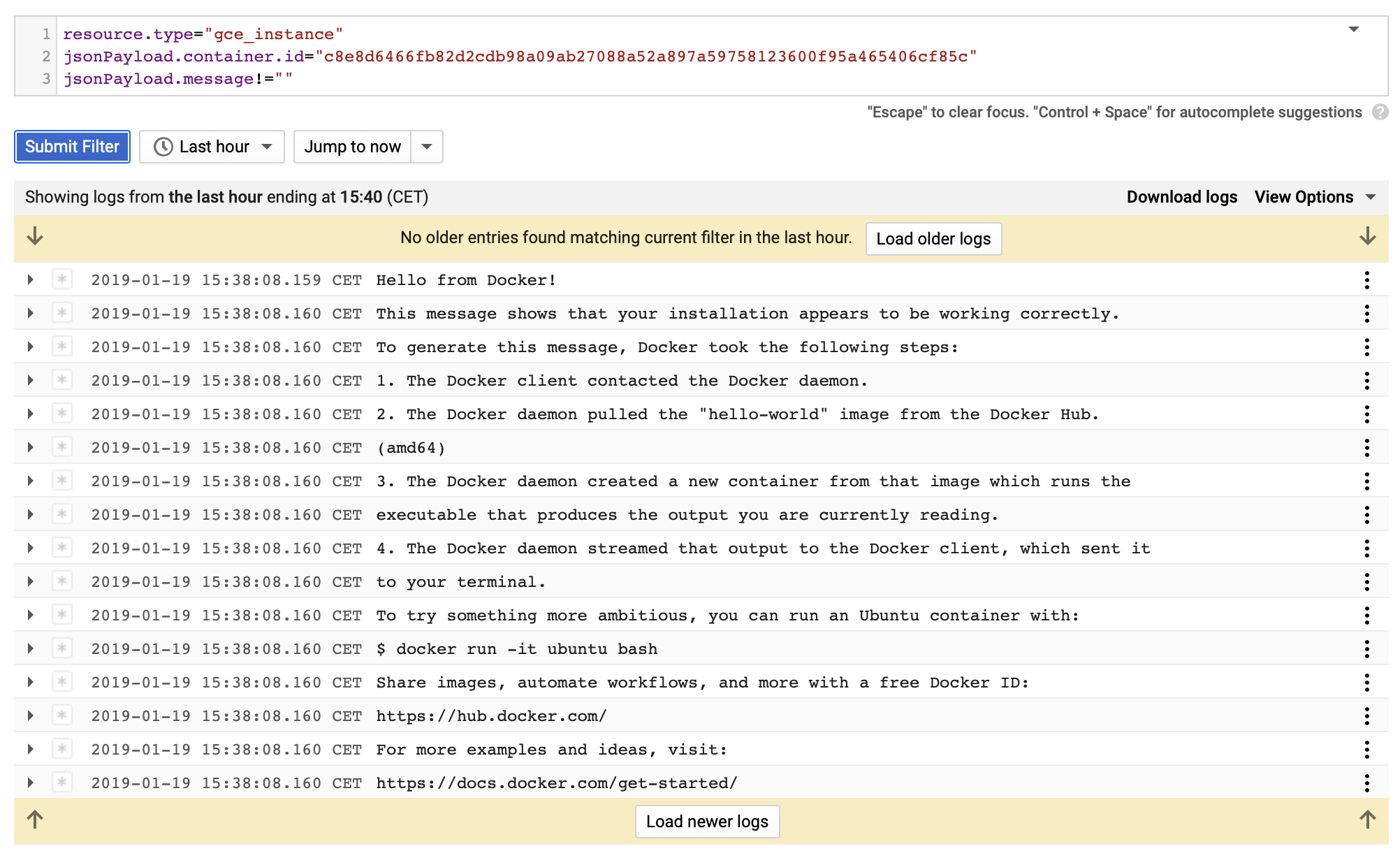

--log-opt gcp-project=google-cloud-project hello-worldWe can find the logo out put in the Google Cloud Logging section as if the were generated by a GCE VM Instance:
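Editing the packaged unit file worked for us, but files under /lib/systemd/system belong to the Docker package and may be replaced on upgrade. One alternative is a systemd drop-in, sketched below. This is not what we did above: the function name and the file name `gcp-credentials.conf` are invented for this example, and the drop-in directory is the conventional location for overrides of docker.service.

```shell
#!/bin/sh
# Sketch: set GOOGLE_APPLICATION_CREDENTIALS for dockerd via a drop-in.
# The file name gcp-credentials.conf is a hypothetical choice.

write_credentials_dropin() {
    # $1 = path to the JSON key file
    # $2 = drop-in directory (optional, defaults to the docker.service one)
    key_path=$1
    dropin_dir=${2:-/etc/systemd/system/docker.service.d}

    mkdir -p "$dropin_dir" || return 1
    # systemd merges this [Service] section into docker.service.
    cat > "$dropin_dir/gcp-credentials.conf" <<EOF
[Service]
Environment="GOOGLE_APPLICATION_CREDENTIALS=$key_path"
EOF
}
```

Run as root, `write_credentials_dropin /path/to/key.json` followed by `systemctl daemon-reload` and `systemctl restart docker` should have the same effect as the edit described above. Either way, `systemctl show docker --property=Environment` is a quick way to check which variables the daemon was actually started with.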

If you did not set the environment variable correctly in the /lib/systemd/system/docker.service file you will get an error like this:

docker: Error response from daemon: failed to initialize logging driver: google: could not find default credentials. See https://developers.google.com/accounts/docs/application-default-credentials for more information.

3. Make gcplogs the default

We wanted to use gcplogs as the default log driver for all the docker containers running on our servers. This can be done by adapting the file /etc/docker/daemon.json:

{
  "log-driver": "gcplogs",
  "log-opts": {
    "gcp-meta-name": "servername",
    "gcp-project": "google-cloud-project"
  }
}

This way we do not need to add the log-driver and log-opt arguments when running our Docker containers.
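A syntax error in daemon.json can keep the Docker daemon from starting, so it may be worth checking the file before restarting. The helper below is a minimal sketch with a made-up function name; it assumes python3 is installed and only checks that the file is valid JSON, not that the options are ones Docker understands.

```shell
#!/bin/sh
# Sketch: sanity-check daemon.json before restarting Docker.
# check_daemon_json is a hypothetical helper; it needs python3.

check_daemon_json() {
    # $1 = config file (optional, defaults to the path used above)
    file=${1:-/etc/docker/daemon.json}
    # json.tool exits non-zero when the file is not valid JSON.
    python3 -m json.tool "$file" > /dev/null 2>&1
}
```

A possible use is `check_daemon_json && sudo systemctl restart docker`. After the restart, `docker info --format '{{.LoggingDriver}}'` should report gcplogs. Note that the default only applies to containers created afterwards; existing containers keep the driver they were created with.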

Conclusion

Having all the log output piped into Google Cloud Logging allowed us to set up metrics very easily (for example, the number of HTTP requests to certain services) and display them in Google Cloud Stackdriver Monitoring.

Since all our team members already have Google Accounts, it was also very easy to give them access (role Logs Viewer) to the logs generated by our various applications, so they can look into them on their own, which frees up resources on the operations team.